Holographic Base-building UX in Real Life

- Gavin Lottering

- Jan 13


You know the UX of base building in video games like No Man's Sky or Dune: Awakening, where the user has a holographic overlay that mimics a building component and then places it? How could that work if it were real? Provide specifics, both theory and engineering.

The holographic base-building UX seen in games like No Man’s Sky and Dune: Awakening is an elegant fusion of speculative augmented reality (AR), spatial computing, and intuitive UI/UX design. Translating this to real-world functionality requires a multi-disciplinary engineering approach involving optics, sensor fusion, AI-driven spatial mapping, and human-computer interaction. Here’s how it could work, grounded in specific engineering and theoretical principles:

🔧 Core Engineering Stack Behind Real-World Holographic Base Building

1. User Interface Layer: Spatial AR Projection System

Theory:

This would be built on augmented reality (AR) frameworks, enabling the projection of interactive, semi-transparent 3D models over real environments.

Engineering:

Headset-Based Display: Devices like HoloLens use waveguide optics and microprojectors to overlay digital images onto a transparent visor, while Apple Vision Pro shows a camera passthrough view of the room on opaque micro-OLED displays.

Laser Plasma or Volumetric Displays (for future): These could project actual volumetric light fields into the air, but today they’re in early development stages and very limited in scale.

Gesture Tracking: Using LiDAR, depth sensors, or EMG (electromyography) armbands to allow users to “grab” and manipulate objects in space.

2. Environment Mapping and Anchoring

Theory:

This relies on simultaneous localization and mapping (SLAM) and scene understanding, key to AR spatial UX.

Engineering:

Depth Sensing: AR devices constantly map the user’s surroundings using stereoscopic cameras and ToF (Time-of-Flight) sensors.

Persistent Anchors: Components placed (e.g., a wall panel) are anchored to real-world coordinates using persistent or cloud anchors (e.g., ARCore Cloud Anchors, ARKit world maps), so they “stay” in place over time.

AI-based Semantic Segmentation: ML models classify terrain types (flat ground, inclined slope, existing structure) to offer valid placement suggestions.
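As a rough illustration of the placement-suggestion step, the sketch below labels a detected plane from the tilt of its surface normal. It assumes the mapping layer already supplies a normal vector per plane with +Y as world "up"; the function name and the 5°/30° thresholds are hypothetical choices, not values from any AR SDK.

```python
import math

def classify_surface(normal, flat_max_deg=5.0, slope_max_deg=30.0):
    """Label a plane by how far its normal tilts away from world up (+Y).

    Thresholds are illustrative assumptions, not SDK defaults.
    """
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0:
        raise ValueError("normal must be non-zero")
    # Cosine of the angle between the normal and the up axis, clamped
    # so floating-point noise cannot push acos out of its domain.
    cos_tilt = max(-1.0, min(1.0, ny / length))
    tilt_deg = math.degrees(math.acos(cos_tilt))
    if tilt_deg <= flat_max_deg:
        return "flat"
    if tilt_deg <= slope_max_deg:
        return "slope"
    return "not_buildable"

print(classify_surface((0.0, 1.0, 0.0)))   # level ground
print(classify_surface((0.0, 1.0, 0.2)))   # gentle incline
print(classify_surface((1.0, 0.0, 0.0)))   # vertical wall
```

A real system would combine a geometric test like this with the semantic labels from the ML model, since a flat tabletop and flat ground look identical to a normal-only check.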

3. Holographic Object Library & Physics-Aware Placement

Theory:

Combines CAD-like object manipulation with real-time physics simulation to ensure that what you build is feasible and stable.

Engineering:

Prefab Component Library: Pre-defined 3D models of walls, doors, solar panels, etc., stored in cloud repositories.

Collision and Support Detection: Physics engines (like Havok or NVIDIA PhysX, both usable from Unity) detect if the component placement would lead to collapse or invalid overlap.

Snap-to-Grid or Magnetic Alignment: AR UX applies vector-based alignment logic to snap elements to compatible anchors or surfaces.
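A minimal sketch of the snapping and overlap logic described above, assuming axis-aligned components and a uniform grid. The function names, the 0.5 m cell size, and the 90° rotation step are illustrative assumptions; a production engine would use its own collision primitives.

```python
def snap_to_grid(position, cell=0.5):
    """Snap an (x, y, z) position in meters to the nearest grid point."""
    return tuple(round(c / cell) * cell for c in position)

def snap_angle(angle_deg, step=90):
    """Snap a yaw angle to the nearest multiple of `step`, wrapped to [0, 360)."""
    return (round(angle_deg / step) * step) % 360

def boxes_overlap(a_min, a_max, b_min, b_max):
    """True if two axis-aligned boxes intersect with non-zero volume."""
    return all(a_min[i] < b_max[i] and b_min[i] < a_max[i] for i in range(3))

# A dragged hologram lands slightly off-grid and off-axis.
print(snap_to_grid((1.26, 0.0, 2.74)))
print(snap_angle(100))

# Reject the placement if it would intersect an existing component.
wall = ((0.0, 0.0, 0.0), (2.0, 3.0, 0.2))
door = ((1.0, 0.0, 0.1), (1.9, 2.0, 0.3))
print(boxes_overlap(wall[0], wall[1], door[0], door[1]))
```

Boxes that only touch along a face are treated as non-overlapping here, which is what lets adjacent wall panels sit flush against each other.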

4. Command & Control System: Multi-Modal Inputs

Theory:

Real-time interactivity needs natural user input systems, enabling intent inference.

Engineering:

Voice Commands (NLP): “Place solar panel here” or “rotate structure 90 degrees” uses speech recognition combined with spatial parsing.

Gesture Inputs: Pinch, drag, or rotate via hand tracking (e.g., HoloLens 2’s 25-point hand tracking model).

Eye Tracking: Gaze-based interaction for precision selection (integrated in Vision Pro and HoloLens 2).
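To make the "speech recognition combined with spatial parsing" idea concrete, here is a toy parser for the two example commands. It assumes speech has already been transcribed to text and that the current gaze point is available as the placement anchor; the grammar, the `parse_command` name, and the `"gaze_point"` marker are all hypothetical. A real system would more likely use an intent model than regular expressions.

```python
import re

ROTATE = re.compile(r"rotate (?P<target>.+?) (?P<angle>-?\d+(?:\.\d+)?) degrees?")
PLACE = re.compile(r"place (?P<target>.+?) here")

def parse_command(text):
    """Turn a transcribed utterance into a structured intent, or None."""
    text = text.lower().strip()
    m = ROTATE.fullmatch(text)
    if m:
        return {"action": "rotate",
                "target": m["target"],
                "angle_deg": float(m["angle"])}
    m = PLACE.fullmatch(text)
    if m:
        # "here" is resolved later against the user's gaze or hand ray.
        return {"action": "place", "target": m["target"], "anchor": "gaze_point"}
    return None

print(parse_command("Rotate structure 90 degrees"))
print(parse_command("Place solar panel here"))
print(parse_command("Make me a sandwich"))
```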

5. Construction Execution: Autonomous Robotics Interface

Theory:

Holographic planning transitions to physical construction using robotic control layers.

Engineering:

Digital Twin Integration: AR blueprint becomes a digital twin, directly executable by construction bots (e.g., Boston Dynamics’ Spot with custom arms).

Robotic Path Planning: Systems like ROS (Robot Operating System) parse spatial blueprints and create motion paths for robotic arms, drones, or 3D printing robots.

Material Verification: Sensor feedback ensures real-world tolerances match the virtual design.
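The material-verification step can be pictured as a simple deviation check between the designed and as-built positions. This sketch assumes both are expressed in the same anchored coordinate frame, in meters; the 5 mm tolerance and the function name are illustrative assumptions.

```python
import math

def within_tolerance(designed, measured, tol_m=0.005):
    """Return (ok, deviation_m) for a designed vs. measured 3D point."""
    deviation = math.dist(designed, measured)
    return deviation <= tol_m, deviation

ok, dev = within_tolerance((1.0, 2.0, 0.0), (1.003, 2.0, 0.0))
print(ok, round(dev * 1000, 1), "mm")

ok, dev = within_tolerance((1.0, 2.0, 0.0), (1.010, 2.0, 0.0))
print(ok, round(dev * 1000, 1), "mm")
```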

6. System Architecture

| Layer | Technologies |
| --- | --- |
| Hardware | AR Headset (Vision Pro, HoloLens), Haptic Gloves, Drones, LiDAR units |
| Software | Unity/Unreal Engine, SLAM algorithms, Computer Vision, NLP |
| Cloud | Azure Spatial Anchors, AWS RoboMaker for robotics backend |
| AI | Vision Transformers for scene understanding, LLMs for natural command parsing |

🧠 Theoretical Enhancements (Near-Future)

Haptic Feedback in Air: Ultrasonic phased arrays to simulate “touch” when placing virtual components.

Brain-Computer Interfaces: Intent-driven AR via neural signal interpretation (e.g., CTRL-Labs).

Swarm Robotics: Multiple autonomous bots building in parallel from AR plans.

✅ Example Workflow (User Journey)

User scans environment → Headset builds 3D mesh of surroundings.

User activates base mode → UI overlays component menu.

User selects “solar array” → Holographic blueprint appears, physics engine validates support.

User drags & places → Component snaps to flat surface, visual feedback shows stability.

User confirms → Blueprint sent to on-site construction bot for execution.

If you’re interested, I can sketch out a technical schematic of this system or build a simulation prompt for something like Unreal or Unity.

Is there a way to do it without autonomous robots, but using some additional understanding of physics?

Yes — you can achieve a real-world analog of the holographic base-building interface without autonomous robots, by focusing instead on augmented human guidance systems empowered by physics-aware AR. This shifts the workload from machines to humans while still using advanced sensing and spatial computing to make building highly intuitive, safe, and precise.

Let’s break down how this would work:

🔍 Core Concept: Human-in-the-Loop Holographic Construction

Instead of robots building the structure, humans do — but every step is guided by AR overlays, real-time physics feedback, and smart tools that assist rather than automate.

⚙️ System Breakdown (No Robots, Physics-Aware)

1. Physics-Embedded Holographic UI

Rather than showing just a model, the AR interface actively integrates real-world physics constraints — load-bearing limits, material tolerances, torque, gravity vectors, etc.

Engineering Stack:

Real-time structural analysis: Finite Element Method (FEM) or simplified physics engines simulate load paths and stress.

Material-aware feedback: Each AR object is tagged with physical properties (mass, stiffness, fragility).

Live constraint visualization: If a component would fail or be unstable, the AR UI renders it in red, or shakes it to indicate rejection.
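As one example of a "simplified physics engine" feeding the overlay color, the sketch below applies the textbook formula for a simply supported rectangular beam with a point load at midspan: peak moment M = PL/4 and section modulus S = bh²/6. The 10 MPa allowable stress and the 75% warning threshold are placeholder assumptions, not design values for any real material or code.

```python
def bending_stress_pa(load_n, span_m, width_m, height_m):
    """Peak bending stress (Pa) for a central point load on a
    simply supported beam with a rectangular cross-section."""
    max_moment = load_n * span_m / 4.0               # M = P*L/4
    section_modulus = width_m * height_m ** 2 / 6.0  # S = b*h^2/6
    return max_moment / section_modulus

def overlay_color(stress_pa, allowable_pa):
    """Map stress utilization to the hologram tint shown to the user."""
    ratio = stress_pa / allowable_pa
    if ratio < 0.75:
        return "green"
    if ratio < 1.0:
        return "yellow"
    return "red"

ALLOWABLE_PA = 10e6  # assumed allowable stress, for illustration only

for load_n in (1000, 1500, 2000):
    stress = bending_stress_pa(load_n, span_m=2.0, width_m=0.05, height_m=0.10)
    print(load_n, "N ->", round(stress / 1e6, 2), "MPa", overlay_color(stress, ALLOWABLE_PA))
```

A closed-form check like this is cheap enough to run every frame, while a full FEM solve would more plausibly run in the background and update the overlay when it finishes.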

2. Smart Tools with AR Sync

Instead of using robots, human builders use tools enhanced with sensors and AR integration.

Examples:

AR-linked levels or rulers: Tools that project exact alignment guides based on the holographic plan.

Gyro-guided drills: Handheld tools that restrict motion if you're off-angle or off-depth.

Projected cut lines: Using lasers or spatial AR to show where to cut or weld.

3. Real-Time Feedback Loop for Human Builders

The AR system constantly evaluates:

Is the human placing the component correctly?

Is the current structure stable under its own weight?

Are there unbalanced loads or shear risks?

Feedback Methods:

Visual cues: Color coding, vibration effects, transparency shifts.

Auditory: Sound alerts for unsafe or optimal placement.

Tactile: Haptic gloves could nudge the user away from bad alignments.
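One of the simplest checks the feedback loop could run is whether the combined center of mass still sits over the support footprint. The sketch below assumes each part is reduced to a point mass in ground-plane coordinates and the footprint is an axis-aligned rectangle; both simplifications, and all names, are illustrative. It only catches tipping, not material failure or shear.

```python
def center_of_mass(parts):
    """parts: list of (mass_kg, (x, z)) in ground-plane coordinates."""
    total = sum(mass for mass, _ in parts)
    if total <= 0:
        raise ValueError("total mass must be positive")
    x = sum(mass * pos[0] for mass, pos in parts) / total
    z = sum(mass * pos[1] for mass, pos in parts) / total
    return x, z

def is_statically_stable(parts, footprint):
    """footprint: (x_min, x_max, z_min, z_max) of the supported area."""
    x, z = center_of_mass(parts)
    x_min, x_max, z_min, z_max = footprint
    return x_min <= x <= x_max and z_min <= z <= z_max

footprint = (-0.5, 0.5, -0.5, 0.5)
base = [(100.0, (0.0, 0.0)), (20.0, (1.5, 0.0))]
print(is_statically_stable(base, footprint))   # small overhang

overloaded = base + [(200.0, (2.0, 0.0))]
print(is_statically_stable(overloaded, footprint))  # heavy cantilever
```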

4. Physics-Aided Assembly Logic

Before human construction, the system runs a physics-based simulation to determine:

Build order optimization (e.g., don’t place a roof before walls).

Temporary structural supports needed.

Weight distribution over uneven terrain.

The AR interface then guides the user step-by-step, only allowing components to be “confirmed” in AR if they're feasible and safe.
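Build-order optimization can be sketched as a topological sort over "rests on" dependencies. The example below uses Python's standard `graphlib` module (3.9+); the component names and the dependency map are made up for illustration, and a real planner would derive them from the physics simulation rather than hard-code them.

```python
from graphlib import TopologicalSorter

# Each component maps to the set of components that must exist first.
supports = {
    "foundation": set(),
    "wall_north": {"foundation"},
    "wall_south": {"foundation"},
    "roof": {"wall_north", "wall_south"},
}

def build_order(dependencies):
    """Return one valid placement sequence; raises CycleError on circular supports."""
    return list(TopologicalSorter(dependencies).static_order())

def can_confirm(component, placed, dependencies):
    """Only allow confirmation once everything the component rests on is placed."""
    return dependencies[component] <= set(placed)

order = build_order(supports)
print(order)
print(can_confirm("roof", ["foundation", "wall_north"], supports))
print(can_confirm("roof", ["foundation", "wall_north", "wall_south"], supports))
```

The `can_confirm` gate mirrors the rule above that a component can only be confirmed in AR when it is feasible: a roof stays unconfirmable until both walls are in place.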

5. Collaborative Multi-User AR Synchronization

If multiple humans are building:

Everyone’s headset shows the same anchored hologram.

Physics constraints are synchronized in real time.

One person places a support beam; others see its effect (e.g., increased load capacity).

This requires spatial cloud anchoring + multi-user session management, already in early use in systems like Unity MARS and Azure Spatial Anchors.

🧠 Bonus: Advanced Human-AI Copilot

The system could include a voice/gesture-based copilot:

“Hey Atlas, is this load safe?”

“Show me where to place next.”

“What happens if I remove this beam?”

The AI would run quick simulations and give feedback in plain language, driven by physics engines and building code logic.

🏗️ Real-World Inspirations

Fologram: AR for masonry guidance — masons follow real-time holographic guides without automation.

Trimble XR10 (HoloLens-based): Used in construction for overlaying BIM (Building Information Models) directly on job sites.

Daqri Smart Helmet (retired, but conceptual benchmark): Assisted industrial workers via AR.

🔚 Summary

You don’t need autonomous robots if you can:

Precisely guide humans using AR overlays

Simulate physics in real-time

Use smart tools that enforce alignment, angles, and placement rules

Let an AI handle constraint logic and build order suggestions

This approach is immediately feasible and scales better for complex tasks requiring human dexterity or judgment.

Would you like a visual concept diagram of how this system would be laid out, or a simulation scenario that could be developed in Unity or Unreal?

What would it take to have a handheld device that could create material out of energy, enough to build a wall or door for instance?

Creating material from energy with a handheld device that can build real physical structures like a wall or door is one of the most extreme future-tech concepts—essentially a handheld matter replicator. To ground this in engineering reality and speculative physics, here's what it would take:

🧪 Core Principles: Matter from Energy

Einstein’s mass-energy equivalence, E = mc², tells us that energy can be converted into mass. But doing this at usable scales involves mind-boggling energy densities and intricate particle assembly challenges.

⚛️ What You'd Need (Theoretically)

1. Energy Source with Massive Density

To build a 10 kg object (e.g., a small metal door), you'd need:

E = mc² = 10 kg × (3×10⁸ m/s)² = 9×10¹⁷ joules

That's equivalent to roughly 215 megatons of TNT (at 4.184×10¹⁵ J per megaton), more than four times the yield of the largest thermonuclear bomb ever tested, stored in your handheld device.

Realistic Constraints:

You’d need energy compression tech orders of magnitude beyond current supercapacitors or nuclear batteries.

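Antimatter is theoretically dense enough (1 gram of antimatter + 1 gram of matter = 180 terajoules), but containment is insanely difficult. Fusion is far less dense: it converts less than 1% of its fuel's mass into energy, so the fuel would outweigh the object being made many times over.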
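The arithmetic in this section is easy to reproduce. The sketch below recomputes the rest energy of a 10 kg object, its TNT equivalent, and the matter-antimatter figure, using the exact speed of light rather than the rounded 3×10⁸ m/s, so the results come out slightly lower than the rounded numbers in the text. The helper names are illustrative.

```python
C = 299_792_458.0            # speed of light in m/s (exact by definition)
J_PER_MEGATON_TNT = 4.184e15 # conventional TNT equivalence

def rest_energy_j(mass_kg):
    """E = m * c^2, in joules."""
    return mass_kg * C ** 2

def megatons_tnt(energy_j):
    return energy_j / J_PER_MEGATON_TNT

def antimatter_needed_kg(target_mass_kg):
    """Annihilating m kg of antimatter with m kg of matter releases 2*m*c^2,
    so half the target mass must be antimatter (ignoring all losses)."""
    return target_mass_kg / 2.0

door_energy = rest_energy_j(10.0)
print(f"10 kg door: {door_energy:.3e} J")
print(f"TNT equivalent: {megatons_tnt(door_energy):.0f} megatons")
print(f"1 g + 1 g annihilation: {rest_energy_j(0.002) / 1e12:.0f} TJ")
print(f"Antimatter for the door: {antimatter_needed_kg(10.0)} kg")
```

Even in this idealized accounting, the device would have to carry 5 kg of antimatter to make a 10 kg door, which is why the energy source is the dominant obstacle.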

2. Atomic Assembly System ("Molecular Constructor")

You need to assemble atoms, then arrange them into molecules, then form bulk materials like wood, steel, or polymer.

Requirements:

Subatomic particle stream (electrons, protons, neutrons) created via particle accelerators or fusion reactions.

Nucleosynthesis: Building stable atoms (e.g., carbon, iron) from protons and neutrons — this occurs in stars or particle colliders.

Atom arrangement: Nanorobotics or ultra-precise field manipulation (like optical tweezers or magnetic traps).

Layer-by-layer synthesis: Like 3D printing at the atomic scale (a theoretical extension of mechanosynthesis from molecular nanotech).

3. Material Blueprints + AI Compiler

You'd need a blueprint for the desired object, compiled down to:

Atomic composition

Structural integrity plan

Assembly sequence

This would be managed by:

A quantum AI or massive neural net that can handle molecular dynamics, bonding energy calculations, and real-time error correction.
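To get a feel for the scale of the "atomic composition" part of such a blueprint, this sketch counts the atoms in a 10 kg door, assuming for simplicity that it is pure iron. The function name and the single-element assumption are illustrative; real materials are alloys or compounds.

```python
AVOGADRO = 6.02214076e23  # atoms per mole (exact SI definition)

# Standard atomic weights in g/mol.
MOLAR_MASS_G_PER_MOL = {"Fe": 55.845, "C": 12.011}

def atom_count(mass_kg, element):
    """Number of atoms in a pure sample of the given element."""
    moles = mass_kg * 1000.0 / MOLAR_MASS_G_PER_MOL[element]
    return moles * AVOGADRO

atoms = atom_count(10.0, "Fe")
print(f"{atoms:.3e} iron atoms in a 10 kg door")
```

That is on the order of 10²⁶ placement operations, each needing a position and a bonding step, which is the workload any assembly sequence would have to schedule.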

🛠️ Realistic Near-Term Approaches (Bridge Tech)

While a true energy-to-matter replicator is centuries off, we can approach this with hybrid tech:

A. Advanced 3D Printers + Compact Material Feedstock

Carry a universal 3D printer and feedstock (e.g., plastic, resin, or metal powder).

AR interface lets you “place” walls or doors.

The printer builds in-place using stored material — not energy-to-matter, but still "on-demand construction."

B. Directed Self-Assembly from Environmental Materials

Device catalyzes the reorganization of raw local matter (soil, rock, air) into desired forms.

E.g., laser sintering sand into glass, or microbial cement formation.

Uses chemically driven construction, not raw matter creation.

🔬 Summary: Requirements for a True Handheld Matter-Creation Device

| Component | What's Needed |
| --- | --- |
| Energy | Fusion-scale or antimatter battery |
| Particle Generation | High-energy particle source, controllable nucleosynthesis |
| Assembly System | Atom-by-atom builder with AI-based feedback |
| Blueprint Handling | Quantum computing-level real-time synthesis compiler |
| Safety Systems | Radiation shielding, temperature control, quantum error correction |

🧠 Bonus: Speculative Concepts

Zeropoint Energy Harvesters: Extracting energy from vacuum fluctuations.

Quantum Foam Manipulators: Building matter from spacetime fluctuations.

Programmable Matter: Instead of building from scratch, reconfigure modular "smart atoms" into any shape or function.

Would you like me to illustrate a theoretical model for this device — showing components and energy flows?

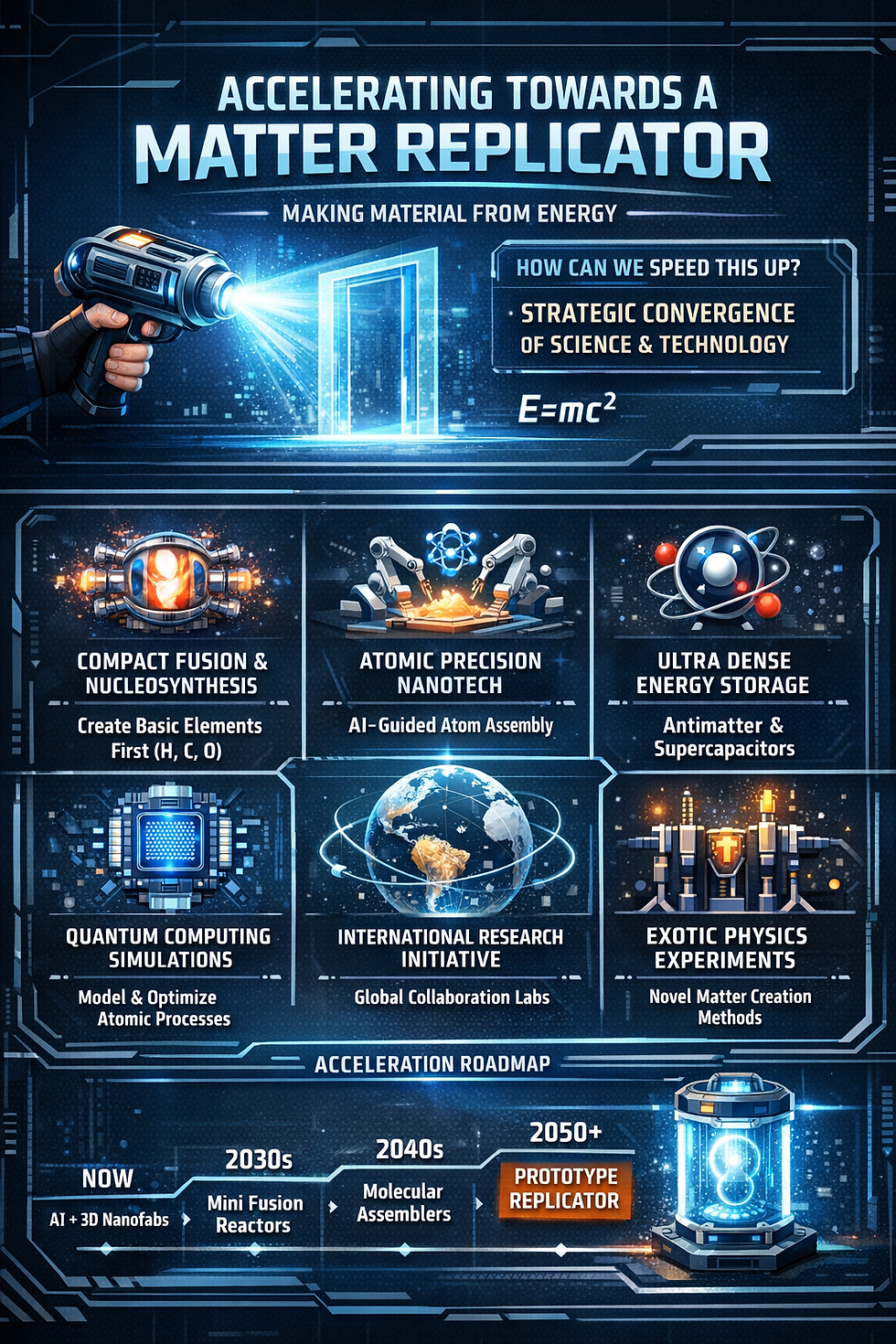

A true energy-to-matter replicator is centuries off? How can we speed this up?

Great question — if we aim to accelerate the development of a true energy-to-matter replicator (turning energy into usable bulk matter), we need breakthroughs across several foundational science and engineering domains, and more importantly, the strategic convergence of those fields.

Here’s how we might speed it up — through focused theory, experimental tech stacks, and new cross-disciplinary programs:

🛠️ 1. Focus on Feasible Matter Creation: Light Elements First

We don’t need to replicate everything at once. Start with simpler atoms and molecules:

Hydrogen, Carbon, Oxygen → Building blocks for organic materials, water, and fuels.

Goal: Prove sustained generation of these atoms from energy in a compact environment (not only in particle colliders).

Acceleration Path:

Invest in compact tabletop fusion and controlled nucleosynthesis systems.

Develop small-scale matter-printing testbeds with limited atom types.

⚛️ 2. New Nucleosynthesis Methods (Beyond Stars)

Problem: Nearly all naturally occurring heavy atoms come from stars, supernovae, or neutron-star mergers.

Solution Path:

Develop artificial nucleosynthesis in a lab, without massive energy scales:

Laser-induced nuclear fusion

Muon-catalyzed fusion

Plasma jets with electromagnetic field compression

Key Leverage Point:

Make "star-like" fusion modular and repeatable in lab-scale devices.

🤖 3. Atomic Assembly with Nanofabrication 10⁶× Better

Bottleneck: Even if we make atoms, assembling them into macroscale objects is ultra slow.

Acceleration Strategy:

Fund advanced molecular nanotechnology (Feynman-style).

Develop scalable mechanosynthesis arms that build atom by atom.

Leverage AI to optimize bond sequences, error correction, and self-replication processes.

Precursor Tech: DNA origami, atomic force microscopy, molecular robotics.
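A quick estimate shows why assembly speed is the bottleneck. The sketch below computes how long a single assembler would take to place every atom in 1 gram of carbon. The baseline rate of 10⁶ atoms per second is a purely hypothetical figure chosen for illustration, not a measured capability of any existing tool.

```python
AVOGADRO = 6.02214076e23          # atoms per mole
CARBON_G_PER_MOL = 12.011         # standard atomic weight of carbon
SECONDS_PER_YEAR = 365.25 * 86400

def assembly_time_years(mass_g, atoms_per_second):
    """Years for one assembler to place every atom in a pure carbon sample."""
    atoms = mass_g / CARBON_G_PER_MOL * AVOGADRO
    return atoms / atoms_per_second / SECONDS_PER_YEAR

baseline = assembly_time_years(1.0, 1e6)        # hypothetical single tip
improved = assembly_time_years(1.0, 1e6 * 1e6)  # the same tip, 10^6 times faster

print(f"baseline: {baseline:.2e} years")
print(f"10^6x faster: {improved:.0f} years")
```

Under these assumptions even a millionfold speedup leaves a single assembler needing over a thousand years per gram, which is why massive parallelism and self-replication appear in the strategy above.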

⚡ 4. Radical Energy Storage & Management

Key Insight: Creating 1 gram of matter from energy takes 90 terajoules.

Acceleration Route:

Develop ultra-high-density energy storage:

Antimatter containment (Penning traps, magnetic bottles)

Supercapacitive fusion cores

Room-temp superconductors for lossless energy transport

R&D Needed In: Energy metamaterials, quantum batteries, plasma stabilization.
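To put the storage gap in numbers, this sketch compares the rest energy of 1 gram of matter with what a conventional battery holds. The 250 Wh/kg figure is an assumed round number for a lithium-ion cell, used only for scale; actual cells vary.

```python
C = 299_792_458.0                 # speed of light, m/s
LI_ION_WH_PER_KG = 250.0          # assumed round figure, for illustration only
J_PER_WH = 3600.0

def rest_energy_j(mass_kg):
    """E = m * c^2, in joules."""
    return mass_kg * C ** 2

def battery_mass_kg(energy_j, wh_per_kg=LI_ION_WH_PER_KG):
    """Battery mass needed to store the given energy at a fixed density."""
    return energy_j / (wh_per_kg * J_PER_WH)

energy = rest_energy_j(0.001)
print(f"1 g of matter: {energy / 1e12:.1f} TJ")
print(f"Battery mass at 250 Wh/kg: {battery_mass_kg(energy):.2e} kg")
```

At that density, storing the energy for a single gram takes roughly a hundred thousand tonnes of battery, so the required improvement is around eleven orders of magnitude.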

🧠 5. Cross-Disciplinary "Replication Lab" Programs

No single field can do this alone.

Proposal:

Establish international research collectives (like CERN, but for matter replication).

Combine:

Quantum field theorists

High-energy physicists

AI simulation researchers

Nanofabrication engineers

Space materials scientists

Use AI to simulate feasibility: Before building experiments, use digital twins of atomic systems to run 1000s of configs.

🧬 6. Leverage Quantum Computing for Design + Control

Quantum computers could:

Simulate exotic matter behavior

Optimize atomic bonding paths

Run real-time error correction in atomic assembly systems

Near-Term Task:

Build hybrid systems where quantum processors guide nanoscale fabrication — bridging energy → matter translation.

📈 Strategic Acceleration Roadmap

| Timeline | Focus |
| --- | --- |
| Now–2030 | Advanced additive manufacturing, AI-guided atomic assembly, fusion miniaturization |
| 2030–2040 | Tabletop nucleosynthesis, mechanosynthesis prototypes, high-density batteries |
| 2040–2050 | AI-matter compilers, first programmable atom assemblers |
| 2050+ | Fusion-powered handheld replicators, early energy-to-matter testbeds |

🚀 Bonus Acceleration Levers

Space-based research platforms (zero-G aids atomic control)

Open simulation platforms for molecular AI modeling

DARPA-style “X-initiatives” for atomic construction tools

International non-military partnerships to avoid tech suppression

Would you like me to build a detailed tech tree diagram showing the specific engineering milestones needed to make this replicator possible by 2100?
